Section: New Results

Plausible and Realistic Image Rendering

Depth Synthesis and Local Warps for Interactive Image-based Navigation

Participants : Gaurav Chaurasia, Sylvain Duchene, George Drettakis.

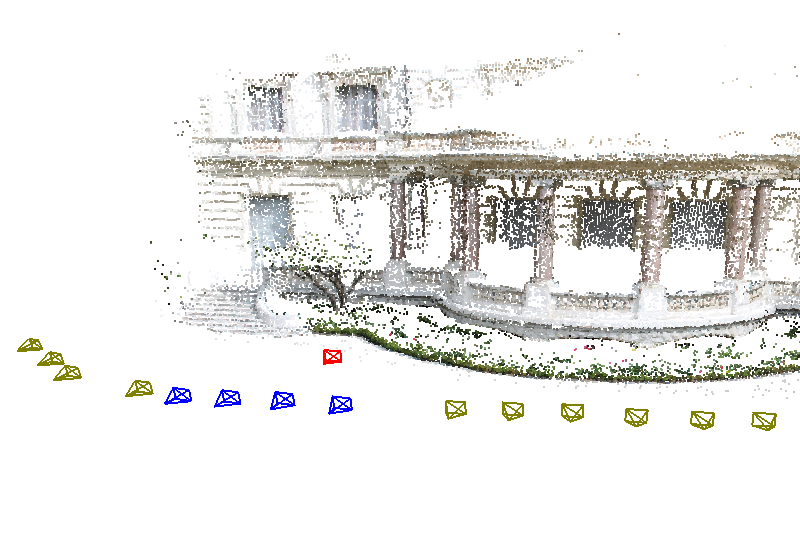

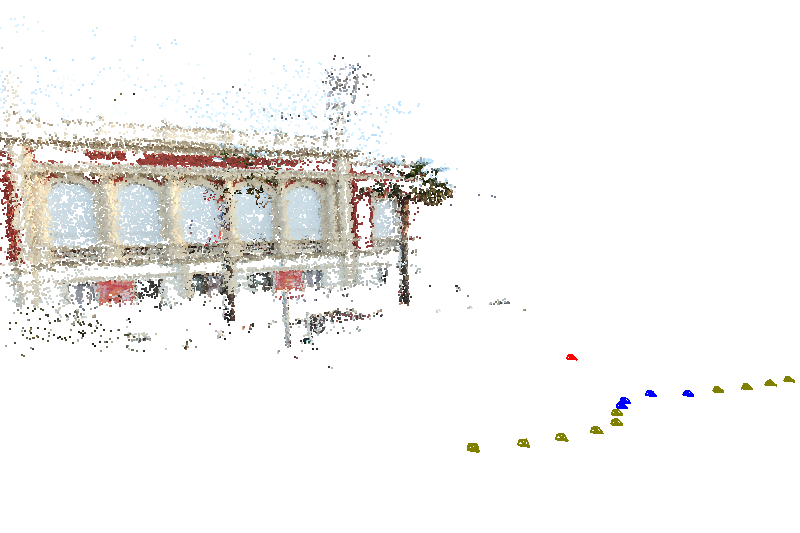

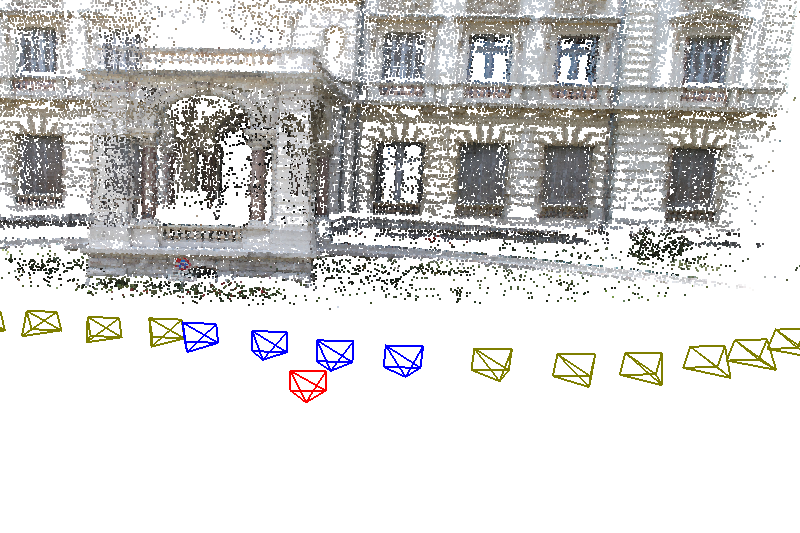

Modern camera calibration and multi-view stereo techniques enable users to smoothly navigate between different views of a scene captured using standard cameras. The underlying automatic 3D reconstruction methods work well for buildings and regular structures but often fail on vegetation, vehicles and other complex geometry present in everyday urban scenes. Consequently, missing depth information makes image-based rendering of such scenes very challenging. This paper introduces a new image-based rendering algorithm that is robust to missing or unreliable geometry and provides plausible novel views even in regions quite far from the input camera positions. The approach first oversegments the input images, creating superpixels of homogeneous color content that preserve depth discontinuities. It then introduces a depth synthesis step for poorly reconstructed regions: it defines a graph on the superpixels and uses shortest walk traversals to fill unreconstructed regions with approximate depth from regions that are well reconstructed and similar in visual content. The superpixels, augmented with synthesized depth, allow a local shape-preserving warp that maps each superpixel of the input image to the novel view without incurring distortions, preserving the local visual content within the superpixel. This allows the approach to compensate effectively for missing photoconsistent depth, the lack of which is known to cause rendering artifacts. The final rendering algorithm blends the warped images, using heuristics to avoid ghosting artifacts. The results demonstrate novel view synthesis in real time for multiple challenging scenes with significant depth complexity (see Figure 3), providing a convincing immersive navigation experience. The paper presents comparisons with three state-of-the-art image-based rendering techniques and demonstrates clear advantages.
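The depth synthesis step can be illustrated with a minimal sketch. It assumes a hypothetical superpixel adjacency graph (`neighbors`), a mean color per superpixel (`color`) and a depth value per superpixel that is `None` where reconstruction failed (`depth`). Edge costs are plain Euclidean color differences and each unreconstructed superpixel simply copies the depth of the reconstructed superpixel reachable at the lowest accumulated cost. These choices are simplifications made for illustration; the similarity measure and depth interpolation of the published method are not reproduced here.

```python
import heapq


def synthesize_depth(neighbors, color, depth):
    """Fill missing superpixel depths by a multi-source shortest-path search.

    neighbors: dict, superpixel id (int) -> iterable of adjacent ids (hypothetical graph)
    color:     dict, superpixel id -> mean color tuple
    depth:     dict, superpixel id -> float, or None if unreconstructed
    """
    def cost(a, b):
        # Illustrative edge cost: Euclidean distance between mean colors.
        return sum((x - y) ** 2 for x, y in zip(color[a], color[b])) ** 0.5

    filled = dict(depth)
    # Every superpixel with known depth is a source at distance 0.
    dist = {s: 0.0 for s, d in depth.items() if d is not None}
    heap = [(0.0, s, s) for s in dist]
    heapq.heapify(heap)
    done = set()
    while heap:
        d, node, source = heapq.heappop(heap)
        if node in done:
            continue
        done.add(node)
        if filled[node] is None:
            # Copy depth from the visually closest reconstructed superpixel.
            filled[node] = depth[source]
        for nxt in neighbors[node]:
            nd = d + cost(node, nxt)
            if nxt not in done and nd < dist.get(nxt, float("inf")):
                dist[nxt] = nd
                heapq.heappush(heap, (nd, nxt, source))
    return filled
```

For a chain of four superpixels in which a gray wall (depth 5.0) and a green tree (depth 2.0) enclose two unreconstructed green superpixels, both gaps receive the tree's depth, because the path through similar colors is much cheaper than the one crossing the wall boundary.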

This work was carried out in collaboration with Olga Sorkine-Hornung at ETH Zurich. It was published in ACM Transactions on Graphics 2013 [12] and presented at SIGGRAPH.

Megastereo: Constructing High-Resolution Stereo Panoramas

Participant : Christian Richardt.

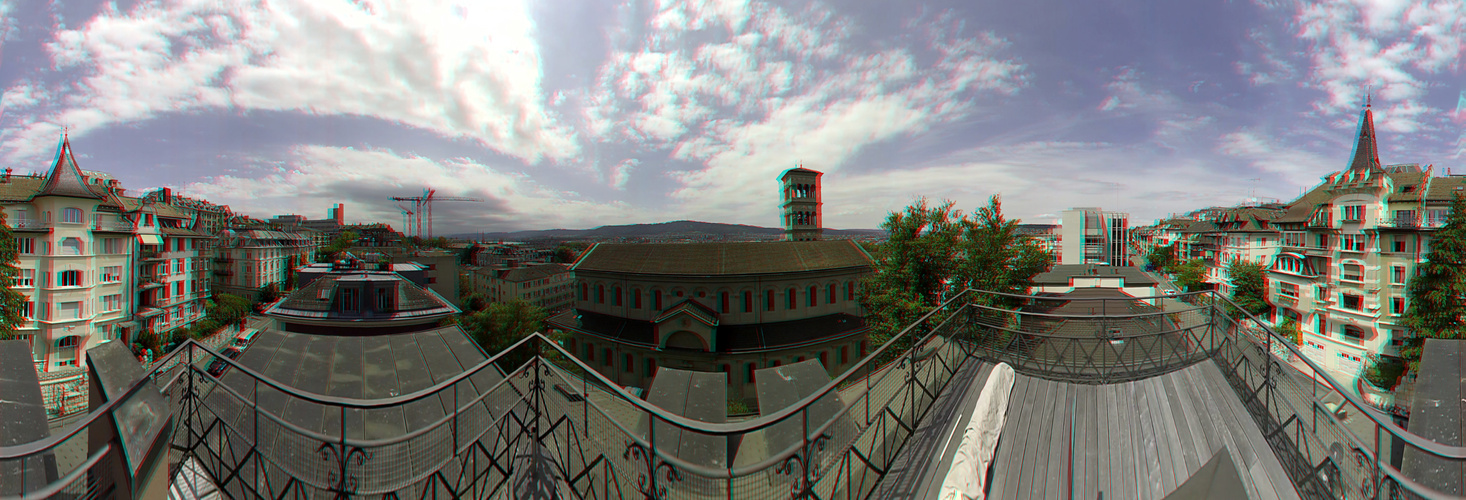

There is currently strong consumer interest in a more immersive experience of content, such as 3D photographs, television and cinema. Panoramas are a great way of capturing environmental content (see Figure 4). We present a solution for generating high-quality stereo panoramas at megapixel resolutions. While previous approaches introduced the basic principles, we show that those techniques do not generalise well to today's high image resolutions and lead to disturbing visual artefacts. We describe the necessary correction steps and a compact representation for the input images in order to achieve a highly accurate approximation to the required ray space. In addition, we introduce a flow-based upsampling of the available input rays which effectively resolves known aliasing issues such as stitching artefacts. The required rays are generated on the fly to match the desired output resolution, even for small numbers of input images. This upsampling runs in real time and enables direct interactive control over the desired stereoscopic depth effect. In combination, our contributions allow the generation of stereoscopic panoramas at high output resolutions that are virtually free of artefacts such as seams, stereo discontinuities, vertical parallax and other mono-/stereoscopic shape distortions.

This work was carried out in collaboration with Yael Pritch, Henning Zimmer and Alexander Sorkine-Hornung at Disney Research Zurich. The paper was presented as an oral at CVPR 2013 [20].

Probabilistic Connection Path Tracing

Participants : Stefan Popov, George Drettakis.

We propose an unbiased generalization of bi-directional path tracing (BPT) that significantly improves its rendering efficiency. Our main insight is that the set of paths traced by BPT contains a significant amount of statistical information that is not exploited.

BPT repeatedly builds an eye sub-path and a light sub-path, connects them, estimates the contribution to the corresponding pixel and then throws the path away. Instead, we propose to first trace all eye and light sub-paths, and then probabilistically connect each eye sub-path to one or more light sub-paths. From a Monte Carlo perspective, this effectively connects each light sub-path to each eye sub-path, substantially increasing the number of paths used to estimate the solution. As a result, convergence is expected to be significantly faster as well.
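One way to read the probabilistic connection step is as discrete importance sampling over the stored light sub-paths. The sketch below is a toy illustration under that assumption, not the estimator of this work: `contrib[j]` stands for a hypothetical scalar contribution obtained by connecting a given eye sub-path to light sub-path `j`, and `pmf[j]` is a hypothetical selection probability. Dividing each sampled contribution by its selection probability keeps the estimate of the all-pairs average unbiased while evaluating only `k` connections.

```python
import random


def connect_probabilistically(contrib, pmf, k, rng):
    """Estimate the mean contribution over all light sub-paths for one eye sub-path.

    contrib: contrib[j] is the (hypothetical) contribution of connecting to light sub-path j
    pmf:     selection probabilities over light sub-paths; they must sum to 1
             and be positive wherever contrib[j] is non-zero
    k:       number of connections actually evaluated
    rng:     a random.Random instance
    """
    m = len(contrib)
    # Pick k light sub-paths according to the selection probabilities.
    picks = rng.choices(range(m), weights=pmf, k=k)
    # Reweight by 1 / (m * pmf[j]) so the expectation equals the plain mean.
    return sum(contrib[j] / (m * pmf[j]) for j in picks) / k
```

When the selection probabilities are proportional to the contributions, every sample yields the same value and the estimate has zero variance; in general, the closer the probabilities follow the contributions, the fewer connections are needed for a given error.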

This work is a collaboration with Frédo Durand from the Massachusetts Institute of Technology, Cambridge and Ravi Ramamoorthi from the University of California, Berkeley in the context of the CRISP Associated Team.

Parallelization Strategies for Associative Image Processing Operators

Participants : Gaurav Chaurasia, George Drettakis.

Basic image processing operations such as prefix sums and recursive filters have so far been optimized on a case-by-case basis. Moreover, these optimized algorithms are very complicated to program because parallelization involves non-trivial splitting of the input domain of the operator. The goal of this work is to generalize the optimization heuristics to a generic class of associative image processing operators by developing an algebraic understanding of the operators and their parallelization options. The algebra can transform associative operations such as box filters, summed-area tables and recursive filters by splitting their domain into smaller subsets of the input image that can be processed in parallel, and then recombining the intermediate results. The ultimate goal is to develop a compiler front-end based on the Halide language that implements this algebra and is capable of parallelizing associative operators of arbitrary footprint in a few lines of code, thereby relieving the programmer of the tedious task of programming the parallelized algorithms. Such a compiler would allow programmers to easily experiment with a plethora of parallelization strategies in a systematic manner.
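The domain-splitting idea can be sketched for the simplest case, a one-dimensional prefix sum. The code below is plain sequential Python written only to expose the structure; the function name `tiled_prefix_sum` and the `tile` parameter are hypothetical, and it does not represent the Halide-based front-end. Each tile is scanned independently, which is the part that could run in parallel, and the tiles are then recombined by applying the running total of all preceding tiles. The recombination is valid for any associative operator `op`.

```python
from itertools import accumulate


def tiled_prefix_sum(values, tile, op=lambda a, b: a + b):
    """Inclusive scan of `values` computed tile by tile.

    values: list of inputs
    tile:   tile length, a positive integer (hypothetical tuning parameter)
    op:     associative binary operator; addition by default
    """
    # Pass 1: scan each tile on its own. Tiles are independent of each other,
    # so this pass is the one a parallel implementation would distribute.
    tiles = [list(accumulate(values[i:i + tile], op))
             for i in range(0, len(values), tile)]

    # Pass 2: recombine. `carry` is the scan result at the end of the
    # previous tile; associativity lets us apply it to whole tile results.
    out = []
    carry = None
    for t in tiles:
        if carry is None:
            out.extend(t)
        else:
            out.extend(op(carry, x) for x in t)
        carry = out[-1]
    return out
```

Changing `tile` changes how the work is split without changing the result, which is the kind of parallelization choice the algebra is meant to expose systematically.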

This work is in collaboration with Jonathan Ragan-Kelley and Frédo Durand of MIT and Sylvain Paris (Adobe Research).

Lightfield Editing

Participant : Adrien Bousseau.

Lightfields capture multiple nearby views of a scene and are establishing themselves as the successors of conventional photographs. As the field grows and evolves, the need for tools to process and manipulate lightfields arises. However, traditional image manipulation software such as Adobe Photoshop is designed to handle single views, and its interfaces cannot cope with multiple views coherently. In this work we evaluate different user interface designs for lightfield editing. Our interfaces differ mainly in the way depth is presented to the user and build upon different depth perception cues.

This work is a collaboration with Adrian Jarabo, Belen Masia and Diego Gutierrez from Universidad de Zaragoza and Fabio Pellacini from Sapienza Università di Roma.